Flakiness information

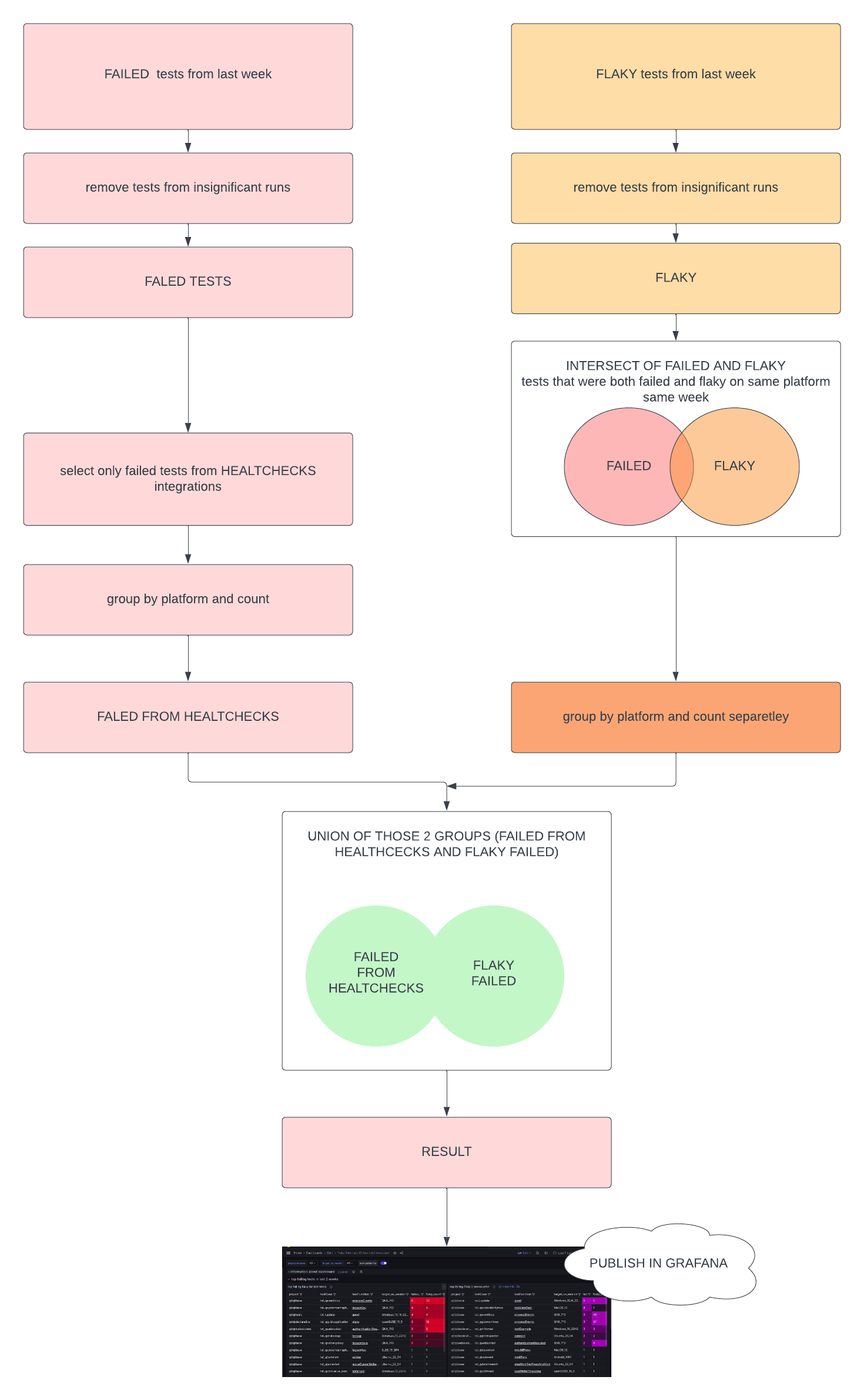

Latest revision as of 12:29, 24 November 2023

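An overview of the Qt test system can be found at https://wiki.qt.io/Qt_test_system.

At the end of every week, we run some analytics and prepare a list of the flaky tests that failed most often during that week.

Those lists are available on the flaky summary dashboard: https://testresults.qt.io/grafana/d/000000007/flaky-summary-ci-test-info-overview?orgId=1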
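As a rough illustration of what such a weekly list amounts to, the sketch below counts flaky failures per test and ranks them. The record format and the test names are hypothetical and only serve the example; the real analytics run on the CI database and are not shown here.

```python
from collections import Counter


def top_flaky_tests(records, limit=3):
    """Rank tests by their number of flaky failures.

    Each record is a hypothetical (test_name, was_flaky_failure) pair.
    """
    counts = Counter(name for name, flaky in records if flaky)
    return counts.most_common(limit)


# Made-up sample data for one week.
week = [
    ("tst_qwidget::focus", True),
    ("tst_qmenu::hover", True),
    ("tst_qwidget::focus", True),
    ("tst_qfile::open", False),
]

print(top_flaky_tests(week))
```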

Why does flakiness happen?

Flakiness is an umbrella term for test and build instabilities. In an ideal scenario, if we run the same compiled code n times, it should produce exactly the same result n times. In the real world, we receive different results, and the impact is particularly important when those are test results in integrations.
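The "n runs, n identical results" idea can be sketched as follows. This is a minimal illustration, not the way the CI detects flakiness: `is_flaky` and the simulated tests are hypothetical names introduced for the example.

```python
import itertools


def is_flaky(run_once, n=10):
    """Return True if n runs of the same callable yield more than one distinct result."""
    results = {run_once() for _ in range(n)}
    return len(results) > 1


# A simulated stable test always reports the same result.
def stable_test():
    return "pass"


# A simulated unstable test fails on every third run.
_outcomes = itertools.cycle(["pass", "pass", "fail"])


def unstable_test():
    return next(_outcomes)


print(is_flaky(stable_test))    # False
print(is_flaky(unstable_test))  # True
```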

So far we have identified several sources of flakiness:

- Flakiness rooted in source code.
- Flakiness related to a particular operating system version on which the test is run. There can be multiple reasons for it, often related to the abstraction layer between mouse events and the windowing system.
- Flakiness related to virtualization. Most of the runs are executed on virtual machines, and not all virtualization mimics the intended hardware well enough; those shortcomings lead to flakiness.
- Flakiness related to running environment settings. Some tests are sensitive, e.g., to slower-than-usual memory.

Why is reducing flakiness important?

Flaky tests are the reason for about 30% of failing integrations. They cause a lot of frustration and erode trust in tests, because developers cannot be sure whether a test failed for a real reason or because of instabilities. We rerun flaky tests up to 5 times; these reruns slow down integration and consume more power. Most running software has some instabilities; the goal is to reduce them as much as is sensible and possible.
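The rerun policy can be pictured with the sketch below. It rests on two assumptions that the text does not confirm: that "up to 5 times" means five attempts in total, and that a test which fails first and passes on a later attempt is the one counted as flaky. The function name and return values are hypothetical.

```python
MAX_ATTEMPTS = 5  # assumption: 5 is the total number of attempts


def classify(run_once, max_attempts=MAX_ATTEMPTS):
    """Run a test until it passes or the attempts run out.

    Returns (verdict, attempts_used), where verdict is 'passed', 'flaky'
    or 'failed'. Assumption: fail-then-pass counts as flaky.
    """
    for attempt in range(1, max_attempts + 1):
        if run_once():
            return ("passed" if attempt == 1 else "flaky", attempt)
    return ("failed", max_attempts)


# Simulated test that fails twice and then passes.
outcomes = iter([False, False, True])
print(classify(lambda: next(outcomes)))  # ('flaky', 3)
```

Every extra attempt is a full additional test execution, which is where the slowdown and the extra power consumption come from.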

How do we get the flaky and failed numbers?

The result of each integration run on CI (the continuous integration system, Coin) is stored in a database. We process the stored information and display it in a tool called Grafana.

A simplified view, containing the information relevant to developers, is presented in the diagram. Note that the actual process is more complex and is not presented here.

What are health-check integrations?

To monitor the stability of CI, successful qt5 integrations are re-run. The last successful qt5 integration, together with its submodules in the corresponding versions, is re-run at night to check how stable CI is. Those integrations, called "HEALTHCHECKS", are known to contain passing, working code; however, due to CI instabilities they sometimes fail when re-run. We collect this information, label it as "CI flakiness" (CI instabilities), and add it to the flaky failed statistics.

What are insignificant runs?

Insignificant runs (work items) are defined for selected platforms. They are "allowed to fail": their failures do not impact (stop) the integration. An insignificant platform run does not cause the Gerrit stage to fail even if the run itself fails.
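The effect of the insignificant flag on a stage can be sketched as below. The `WorkItem` class, its fields, and the platform names are hypothetical and do not reflect Coin's actual data model; the sketch only encodes the rule that insignificant failures are ignored.

```python
from dataclasses import dataclass


@dataclass
class WorkItem:
    platform: str          # hypothetical platform label
    passed: bool           # did the run pass?
    insignificant: bool = False  # "allowed to fail" when True


def stage_passes(items):
    """A stage passes when every significant work item passed."""
    return all(item.passed for item in items if not item.insignificant)


items = [
    WorkItem("platform-a", passed=True),
    WorkItem("platform-b", passed=False, insignificant=True),
]
print(stage_passes(items))  # True: the only failure is insignificant

items.append(WorkItem("platform-c", passed=False))
print(stage_passes(items))  # False: a significant run failed
```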

What is a platform?

The Qt library is multi-module and cross-platform. Often, but not always, Qt modules are built on what we call the host platform but are intended to be run and tested on what we call the target platform. In most cases, the platform is defined by the version of the operating system (e.g. macOS 12 or Ubuntu 20), the processor architecture (e.g. Intel x86 32-bit or x86_64, ARM 64), and the compiler (e.g. clang, gcc, msvc). Additionally, extra features can be defined, and a subset of tests can be run.

The diagram of counting flakiness statistics (FLAKY FAILED STATS.png) illustrates the data process.