QtCS2024 Flaky: Difference between revisions

(Put the details I prepared in advance into this page, so they're not lost.) |

m (→Status quo: The * was a reference to two labels in the SVG, not a bullet point.) |

||

| Line 29: | Line 29: | ||

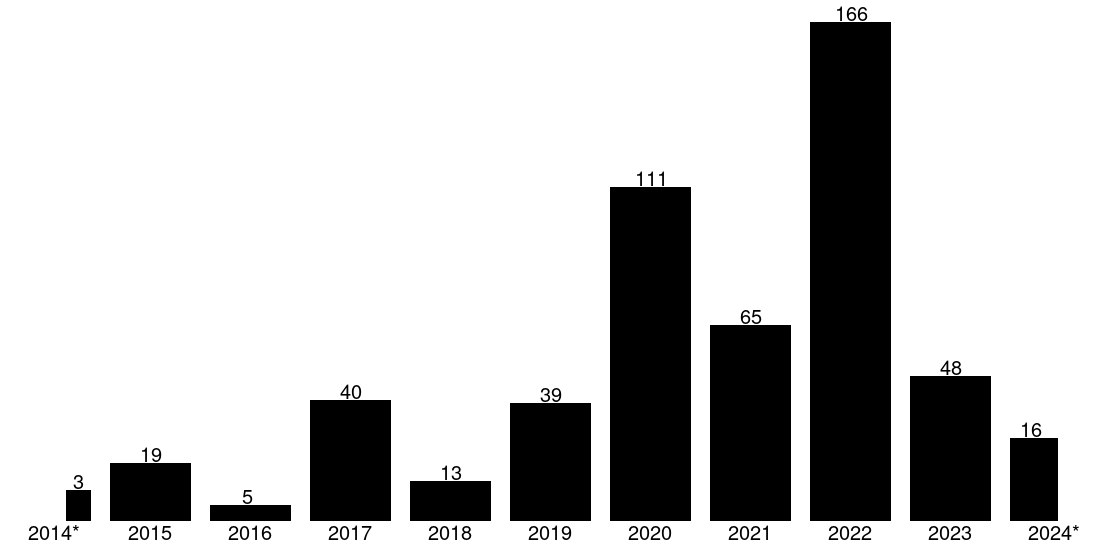

Among test functions, that are currently being run by Coin, how many (that still are) were blacklisted in each year since the feature was introduced ? | Among test functions, that are currently being run by Coin, how many (that still are) were blacklisted in each year since the feature was introduced ? | ||

[[File:FlakyHistogram.svg]] | [[File:FlakyHistogram.svg]] | ||

* 2014 and 2024 are incomplete years, here rescaled to keep area proportional to number, with width rescaled to the relevant fraction of the year. | |||

<kbd>*</kbd> 2014 and 2024 are incomplete years, here rescaled to keep area proportional to number, with width rescaled to the relevant fraction of the year. | |||

These results come from nightly health-check runs that do run all tests of every module in Qt5, using the SHA1s from the last successful Qt5 submodule update. See here for recent data. (Note that counting results from normal integrations would bias the data to the tests run earlier in each module, as most test-runs abort on first failure.) | These results come from nightly health-check runs that do run all tests of every module in Qt5, using the SHA1s from the last successful Qt5 submodule update. See here for recent data. (Note that counting results from normal integrations would bias the data to the tests run earlier in each module, as most test-runs abort on first failure.) | ||

Revision as of 13:48, 10 September 2024

We currently blacklist flaky tests, which means real failures they might have brought to our attention can get ignored. This should be a temporary state, but some have been blacklisted for many years. Each should be associated with a Jira ticket that won't be closed until the test is fixed and blacklisting removed; it's not clear that's always followed, though. How can we improve on this situation ? See also: QTQAINFRA-6400 and QTBUG-96295.

Details Eddy Prepared in Advance

Ignoring flaky test results

In September 2014, for Qt 5.4, Lars Knoll added support to QtTest for blacklisting tests. We already had QEXPECT_FAIL() for known bugs and QSKIP() for when a precondition of being able to do a given test isn't met, but we had some tests that should have passed, and sometimes did, but that also sometimes failed, on at least some platforms, without any predictable justification that could be encoded as the condition on a QSKIP() or QEXPECT_FAIL(). We describe these as flaky tests.

Marking a flaky test as blacklisted tells the test system to ignore its results. Such marking can be made specific to a particular platform – identified by compiler, operating system, hardware or any combination of these, complete (in at least some cases) with version details – and even limited by whether we're testing a developer build and/or on Coin (or with any particular value for QTEST_ENVIRONMENT set in its environment). The results are still reported, as BPASS or BFAIL, but QtTest won't fail the test-run based on a failure of the ignored test.

The problem with ignoring a test's result is that the test is there to catch the possible (re-)introduction of a bug; and it won't if we ignore its results. This would, if we looked for it, show up as a transition from both BPASS and BFAIL showing up as test results to only the latter showing up. The opposite transition, that would flag that we could resume paying attention to the test's results, will also escape our attention. See QTQAINFRA-6519, which includes two sightings of always-failing tests.

We sporadically make efforts to clean up flaky tests, but many of them have remained ignored for large fractions of the decade, up to and including all of it, that this option has been available.

So what should our policy on blacklisting be ?

Existing (lack of) policy

To the extent that we have a policy, it's in a big comment near the top of qtbase/src/testlib/qtestblacklist.cpp in which various of us have documented how the system works. While this steers shy of Commandments, it does encourage identifying the reason for each entry in a comment, using bug-IDs where available, preferably on the lines that indicate each configuration in which to ignore the test. (It can be hard to tell the scope of a standalone comment.)

In reviews, I encourage folk to use, in the comment, the ID of a bug that shall remain open (albeit possibly with its priority lowered) when the test's failures are being ignored, to encourage folk to come back and actually fix the issue, rather than merely muting its symptoms. Sadly, the overwhelming majority of entries in BLACKLIST files are uncommented, or say nothing more useful than # flaky (which is a fatuous thing to say in these files).

TTLOFIR now includes my thoughts on how test-suppression should be done, based (somewhat loosely) on what the QtTest best practice guide says on the subject (but then, I also wrote that).

Status quo

Take all metrics with a pinch of salt.

We currently have 1900 non-empty lines – of which 287 are pure-comment lines, 745 identify tests and 868 identify configurations in which to ignore those – in 219 BLACKLIST files. 37 of those lines, in two files, are QtTest's own self-tests of the suppression feature, so don't really count. Of the 279 lines with bug-ID comments, five are standalone QTQAINFRA comments, 235 are standalone QTBUG comments and 39 have a QTBUG comment following a configuration (as recommended by the documentation). Of the two QTQAINFRA bugs noted in comments, one has been closed, the other is in NMI. There are 180 distinct QTBUG IDs. Given how much smaller this is than the 868 configurations specified, I am fairly confident many flaky failures are not tracked in Jira.

We currently (Summer 2024) ignore about one test in 40 of those run by Coin (around 560 out of 22 thousand; see QTQAINFRA-6481). This proportion has remained fairly stable as the number of tests to run grows. Every ignored test is a piece of technical debt. Typical tests are run O(80k) times per year (well above that for qtbase and qtdeclarative), so we're wasting energy running tests when we ignore their results.

Among test functions, that are currently being run by Coin, how many (that still are) were blacklisted in each year since the feature was introduced ?

* 2014 and 2024 are incomplete years, here rescaled to keep area proportional to number, with width rescaled to the relevant fraction of the year.

These results come from nightly health-check runs that do run all tests of every module in Qt5, using the SHA1s from the last successful Qt5 submodule update. See here for recent data. (Note that counting results from normal integrations would bias the data to the tests run earlier in each module, as most test-runs abort on first failure.)

Notes

- Can we delete blacklisted *tests* with an age older than X years? No, because:

- They might work on other platforms

- BLACKLISTS provides intelligence: They can e.g. give an indication to which areas there are problems in Qt.

- Ideally, nothing should be blacklisted. Unfortunately we don't live in an ideal world with deadlines or CI integration that blocks progress for many contributors.

- There are similar tools available:

- QSKIP (used instead of BLACKLIST if the test can cause crashes)

- QEXPECT_FAIL (if you *know* the test fails)

- BLACKLIST (for e.g. flaky tests)

Blacklisted tests should be run. We need to monitor their state over time (is it fixed?)

Every time a test is blacklisted, it should be accompanied with a JIRA issue (maybe gerrit bot can require a QTBUG-xxxxx)

We have a page that explains what we can do to reduce flakiness of a test.

Use "ci" blacklist tag to specify that it is a problem in CI only.